Privacy-Enhancing Technologies (PETs)

The overall goal of Bridge2AI is to generate flagship datasets and best practices for the collection and preparation of AI/ML-ready data to address biomedical and behavioral research grand challenges. A central challenge in allowing such data to be (re)used and leveraged for biomedical advances is data privacy.

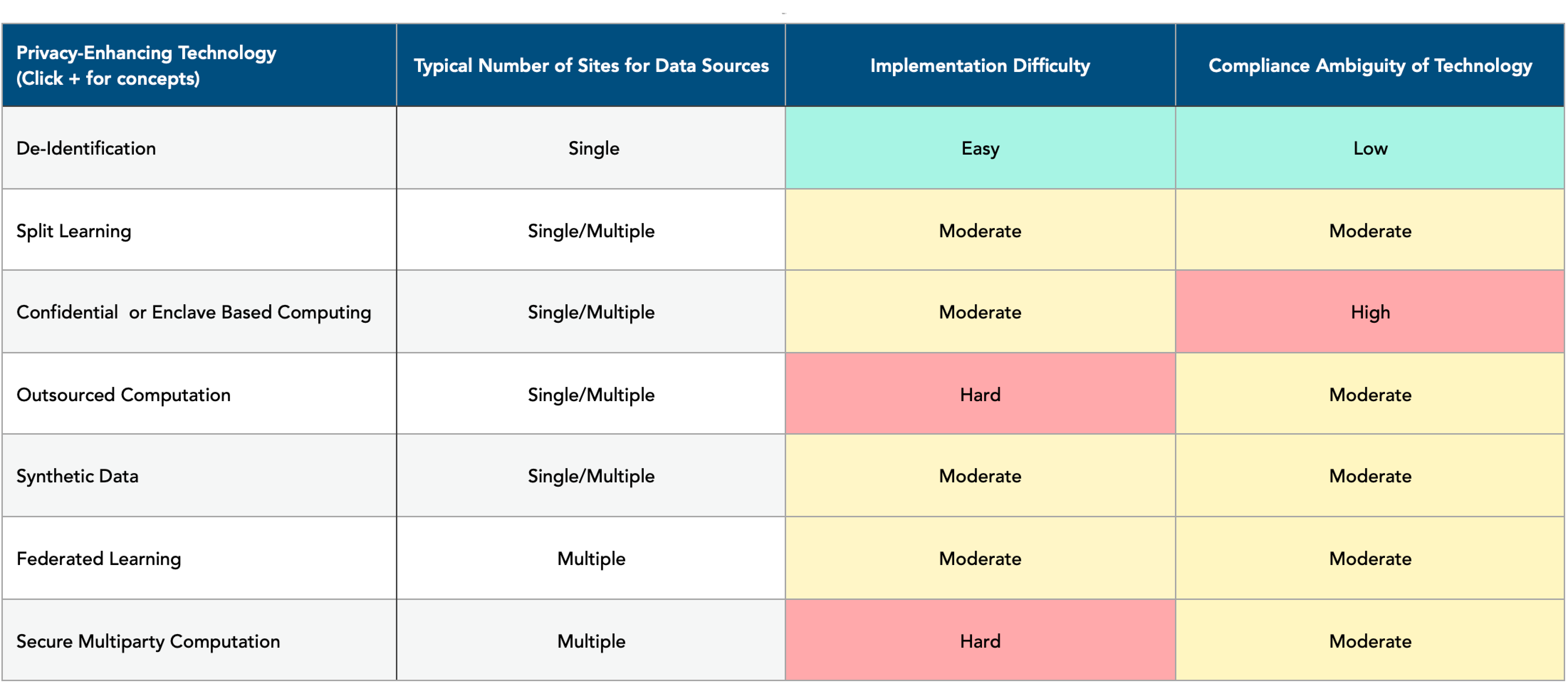

Here, data privacy means keeping private any data that could potentially be used to identify the individual who contributed it. There are several approaches to enhancing data privacy. The table below offers a notional view of these technologies (representative, not exhaustive). A recent review elaborates on some of these.¹

Some Characteristics of Privacy-Enhancing Technologies - A Rough Approximation

Concept Details of Privacy-Enhancing Technologies

De-Identification

These technologies remove enough identifying information that there is no reasonable basis to believe the residual information can be used to identify an individual (per, for example, the US Health Insurance Portability and Accountability Act, the EU General Data Protection Regulation, and the US Common Rule).

A well-studied approach is the family of k-based models (e.g., k-anonymity), wherein privacy is enhanced such that each individual cannot be distinguished from at least k-1 others in the same dataset.¹·²
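The k-anonymity property described above can be checked with a few lines of code. The following is a minimal sketch, not a production de-identification tool; the record fields (`age_band`, `zip3`, `diagnosis`) and the choice of which columns count as quasi-identifiers are hypothetical.

```python
from collections import Counter

def smallest_group_size(records, quasi_identifiers):
    """Size of the smallest group of records sharing the same quasi-identifier values."""
    groups = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return min(groups.values()) if groups else 0

def is_k_anonymous(records, quasi_identifiers, k):
    """True if every record is indistinguishable from at least k-1 others."""
    return smallest_group_size(records, quasi_identifiers) >= k

# Hypothetical toy records with already-generalized quasi-identifiers.
records = [
    {"age_band": "30-39", "zip3": "021", "diagnosis": "A"},
    {"age_band": "30-39", "zip3": "021", "diagnosis": "B"},
    {"age_band": "40-49", "zip3": "021", "diagnosis": "A"},
    {"age_band": "40-49", "zip3": "021", "diagnosis": "C"},
    {"age_band": "40-49", "zip3": "021", "diagnosis": "B"},
]

print(is_k_anonymous(records, ["age_band", "zip3"], k=2))
print(is_k_anonymous(records, ["age_band", "zip3"], k=3))
```

In this toy dataset the smallest group has two records, so the data satisfies k = 2 but not k = 3; further generalization or suppression would be needed to reach a higher k.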

An alternative method is to add “noise” (e.g., differential privacy) to the data such that it is difficult to determine the presence of any one individual.
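One common way to realize the noise-based idea is the Laplace mechanism, sketched below under stated assumptions. Here the noise is added to an aggregate (a count) rather than to each record, which is only one of several possible designs. The function names, the example ages, and the value of `epsilon` are illustrative choices.

```python
import random

def laplace_noise(scale, rng):
    # The difference of two i.i.d. exponentials with mean `scale`
    # follows a Laplace(0, scale) distribution.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(values, predicate, epsilon, rng):
    """Noisy count of values matching `predicate`.

    A counting query changes by at most 1 when one individual is added
    or removed (sensitivity 1), so the Laplace scale is 1 / epsilon.
    """
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)

# Hypothetical ages; the query asks how many participants are 65 or older.
ages = [34, 51, 67, 45, 72, 29, 80, 66]
rng = random.Random(0)
noisy = private_count(ages, lambda a: a >= 65, epsilon=1.0, rng=rng)
print(noisy)
```

Smaller values of `epsilon` add more noise and give stronger privacy at the cost of accuracy.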

Split Learning

Often the data is held in a secure environment (by the client), while the modelers (providers/developers of new technologies for analysis and use) work in a different environment (say, a server) where they build AI/ML models. This approach splits the ML (deep learning) model into two pieces. The initial portion of the model runs in the client environment, up to a layer whose contents are substantially devoid of identifiable content; this layer is termed the “smash layer”. The smash layer is then transferred to the server side, which runs the latter portion of the model. The premise is that transferring only the smash layer provides the privacy enhancement, while the overall ML model provides the learning needed for data use.
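The client/server split can be illustrated with a tiny forward pass. This is a sketch only: the weights, layer sizes, and function names are hypothetical, training (the backward pass across the split) is omitted, and whether a given smash layer is actually free of identifiable content depends on the model and data.

```python
import math

def linear(weights, bias, x):
    """Dense layer: one output per weight row."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]

def relu(v):
    return [max(0.0, x) for x in v]

def client_forward(x, params):
    """Runs inside the data holder's environment; returns the smash layer."""
    return relu(linear(params["W"], params["b"], x))

def server_forward(smashed, params):
    """Runs on the modeler's server; sees only the smash layer, never x."""
    z = linear(params["W"], params["b"], smashed)[0]
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical parameters for a 3-input, 2-hidden-unit, 1-output model.
client_params = {"W": [[0.5, -0.25, 0.1], [-0.3, 0.8, 0.2]], "b": [0.0, 0.1]}
server_params = {"W": [[1.0, -0.5]], "b": [0.2]}

raw_record = [1.0, 2.0, 3.0]                          # stays on the client
smashed = client_forward(raw_record, client_params)   # only this is transferred
prediction = server_forward(smashed, server_params)
print(smashed, prediction)
```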

Confidential or Enclave Based Computing

This approach is based on creating a “protected zone” within the environment where the data is held and where knowledge of how to use the data is often needed. Inside this protected zone (a tamper-resistant confidential environment, or enclave, hence the title), a model developer can provide a model that runs on the data, potentially including private information, but can pull out only outputs that contain no potentially identifying information; this restriction is enforced by a policy manager. The protected zone can be hardware-based (such as Intel SGX) or software-based.
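The role of the policy manager can be sketched as a gate on what leaves the protected zone. The code below is a plain-software analogy only: it provides none of the tamper resistance of a real enclave and does not use any Intel SGX API. The class name, the toy policy, and the records are all hypothetical.

```python
class ProtectedZone:
    """Toy stand-in for an enclave: data goes in, only approved outputs come out."""

    def __init__(self, records, min_group_size):
        self._records = list(records)        # never returned directly
        self._min_group_size = min_group_size

    def run(self, model):
        """Run the developer's `model` on the data, then apply the release policy."""
        result = model(self._records)
        if not self._policy_allows(result):
            raise PermissionError("result blocked by policy manager")
        return result

    def _policy_allows(self, result):
        # Toy policy: release only a single number computed over enough records.
        # A real policy would need to be far stricter than this.
        is_number = isinstance(result, (int, float)) and not isinstance(result, bool)
        return is_number and len(self._records) >= self._min_group_size

def mean_age(rows):
    return sum(r["age"] for r in rows) / len(rows)

zone = ProtectedZone([{"age": 40}, {"age": 50}, {"age": 60}], min_group_size=3)
print(zone.run(mean_age))          # an aggregate is released
try:
    zone.run(lambda rows: rows[0]) # a raw record is blocked
except PermissionError as err:
    print(err)
```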

Outsourced Computation

This approach is based on encrypting the data and the method of analysis at the client, processing the encrypted data using the encrypted analysis method, and returning the results so that they can be decrypted for aggregation, understanding, and use. Example: Homomorphic Encryption.
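To make the idea of computing on encrypted data concrete, here is a toy version of the Paillier cryptosystem, an additively homomorphic scheme. It is a sketch under strong assumptions: the primes are far too small to be secure, only the data (not the analysis method) is encrypted, and only addition is supported. All function names are illustrative.

```python
import math
import secrets

def keygen(p=1009, q=1013):
    """Toy key generation; real keys use primes of 1024+ bits."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    mu = pow(lam, -1, n)                               # valid because g = n + 1
    return n, (lam, mu)

def encrypt(n, m):
    n_sq = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, m, n_sq) * pow(r, n, n_sq)) % n_sq

def add_encrypted(n, c1, c2):
    """Multiplying ciphertexts adds the underlying plaintexts."""
    return (c1 * c2) % (n * n)

def decrypt(n, private_key, c):
    lam, mu = private_key
    u = pow(c, lam, n * n)
    return ((u - 1) // n * mu) % n

n, private_key = keygen()
c_total = add_encrypted(n, encrypt(n, 120), encrypt(n, 85))  # done by the server
total = decrypt(n, private_key, c_total)                     # done by the client
print(total)
```

The party performing `add_encrypted` never sees 120, 85, or their sum; only the holder of the private key can decrypt the result.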

Synthetic Data

The synthetic data approach involves generating artificial data that mimics the properties of real data. Real data is used to train models (e.g., Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), or noise-based Diffusion Models) that generate artificial data sets. In a GAN, for example, a Generator produces synthetic data, and both real and generated data are fed to a Discriminator, which tries to determine whether each sample is real or synthetic. As long as the Discriminator can consistently tell the two apart, the system parameters are tuned; training continues until the Discriminator is unable to distinguish between real and synthetic data. At this stage the synthetic data set is accepted as a privacy-enhanced synthetic data set.
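The train-then-sample workflow can be shown with a deliberately simple stand-in. The sketch below is not a GAN, VAE, or diffusion model: it substitutes the simplest possible generative model (a single Gaussian fitted to one column) so that the example stays short and runnable. The heart-rate values and function names are hypothetical.

```python
import random
import statistics

def fit_gaussian(values):
    """'Train' the model: estimate mean and standard deviation from real data."""
    return statistics.mean(values), statistics.stdev(values)

def sample_synthetic(model, n, rng):
    """Generate n artificial values from the fitted model."""
    mu, sigma = model
    return [rng.gauss(mu, sigma) for _ in range(n)]

# Hypothetical real measurements (resting heart rate, beats per minute).
real_heart_rates = [62, 71, 68, 75, 80, 66, 73, 69, 77, 64]

model = fit_gaussian(real_heart_rates)
synthetic = sample_synthetic(model, 2000, random.Random(0))

print(statistics.mean(real_heart_rates), statistics.mean(synthetic))
```

A deep generative model replaces the two-parameter fit with a learned network, but the overall pattern is the same: fit on real data, then release only sampled records. Matching summary statistics does not by itself establish that the synthetic data protects privacy.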

Federated Learning

The Federated Learning approach is similar to that of Split Learning discussed earlier, with one significant difference. In Split Learning, only the initial portion of the model runs at the client site on the individual data, potentially including identifiable information. In Federated Learning, the entire model is run at each of the client (data) sites (this applies to multiple sites only), and the model parameters (gradients or network parameters), not the data, are transferred to the server (model) site. The premise is that the original data cannot readily be recovered from the transferred parameters. Combining this approach with others (such as differential privacy) can therefore allow for synergistic performance.
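A minimal sketch of this parameter exchange, in the style of federated averaging, is shown below. It assumes a one-parameter linear model y = w * x, two hypothetical client sites with noise-free data, and hand-picked values for the learning rate and number of rounds. Only the weight `w` ever leaves a client.

```python
def local_update(w, xs, ys, lr=0.01, steps=5):
    """Run a few gradient steps on one client's private data; return the new weight."""
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
        w -= lr * grad
    return w

def federated_average(client_weights, client_sizes):
    """Server-side step: average client weights, weighted by local sample count."""
    total = sum(client_sizes)
    return sum(w * n for w, n in zip(client_weights, client_sizes)) / total

# Hypothetical private datasets held at two sites (true relationship: y = 2x).
clients = [
    ([1.0, 2.0, 3.0], [2.0, 4.0, 6.0]),
    ([4.0, 5.0], [8.0, 10.0]),
]

global_w = 0.0
for _ in range(20):
    # Each client trains locally and sends back only its updated weight.
    updates = [local_update(global_w, xs, ys) for xs, ys in clients]
    global_w = federated_average(updates, [len(xs) for xs, _ in clients])

print(global_w)
```

The server recovers a weight close to 2 without seeing any (x, y) pair from either site.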

Secure Multiparty Computation

Secure Multiparty Computation is very similar to Outsourced Computation (Homomorphic Encryption), except that here the encrypted data from different organizations can be pooled to run the model, leveraging data from different programs. The advantage of this approach is that it gives an exact computation of the learning, rather than the approximation provided by Federated Learning.
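One widely used building block for secure multiparty computation is additive secret sharing, sketched below for a joint sum. This is an illustrative assumption about how the pooling could be done, not a description of any specific deployment; the modulus, function names, and per-site counts are hypothetical.

```python
import secrets

PRIME = 2**61 - 1  # all arithmetic is done modulo this prime

def share(value, n_parties):
    """Split `value` into n random-looking shares that sum to it modulo PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % PRIME)
    return shares

def secure_sum(private_values):
    """Each party shares its value; parties add the shares they hold; sums are combined."""
    n = len(private_values)
    # distributed[i][j] is the share that party i sends to party j.
    distributed = [share(v, n) for v in private_values]
    partial_sums = [sum(distributed[i][j] for i in range(n)) % PRIME for j in range(n)]
    return sum(partial_sums) % PRIME

# Hypothetical per-organization case counts that must not be revealed individually.
site_counts = [120, 85, 42]
total = secure_sum(site_counts)
print(total)
```

No single share reveals anything about a site's count, yet the combined result is the exact sum, which illustrates the exactness noted above.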